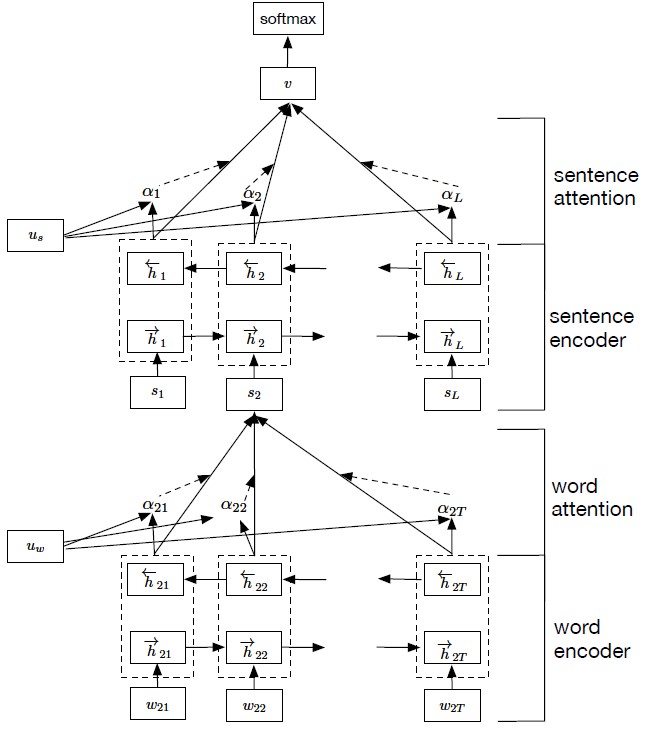

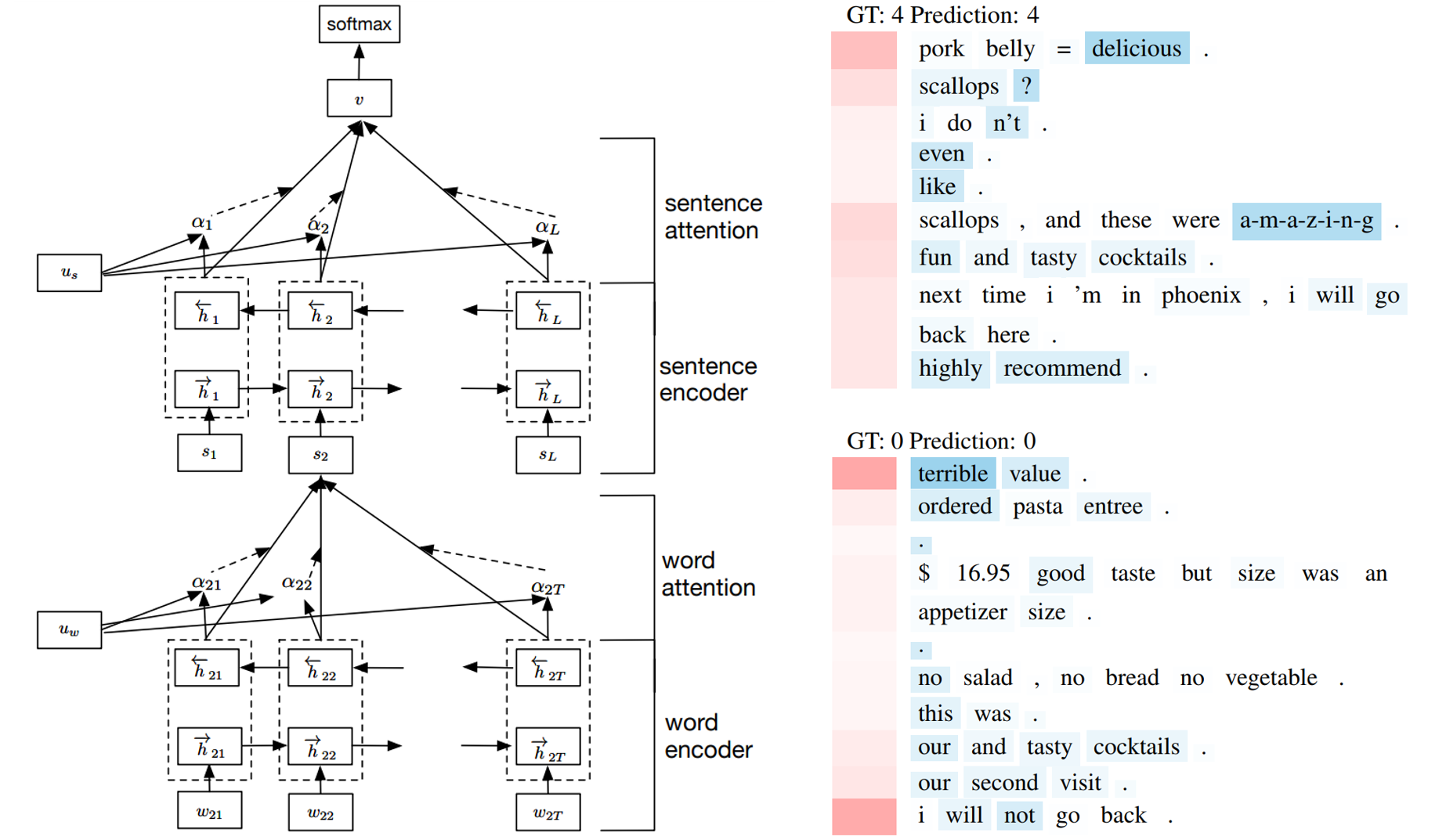

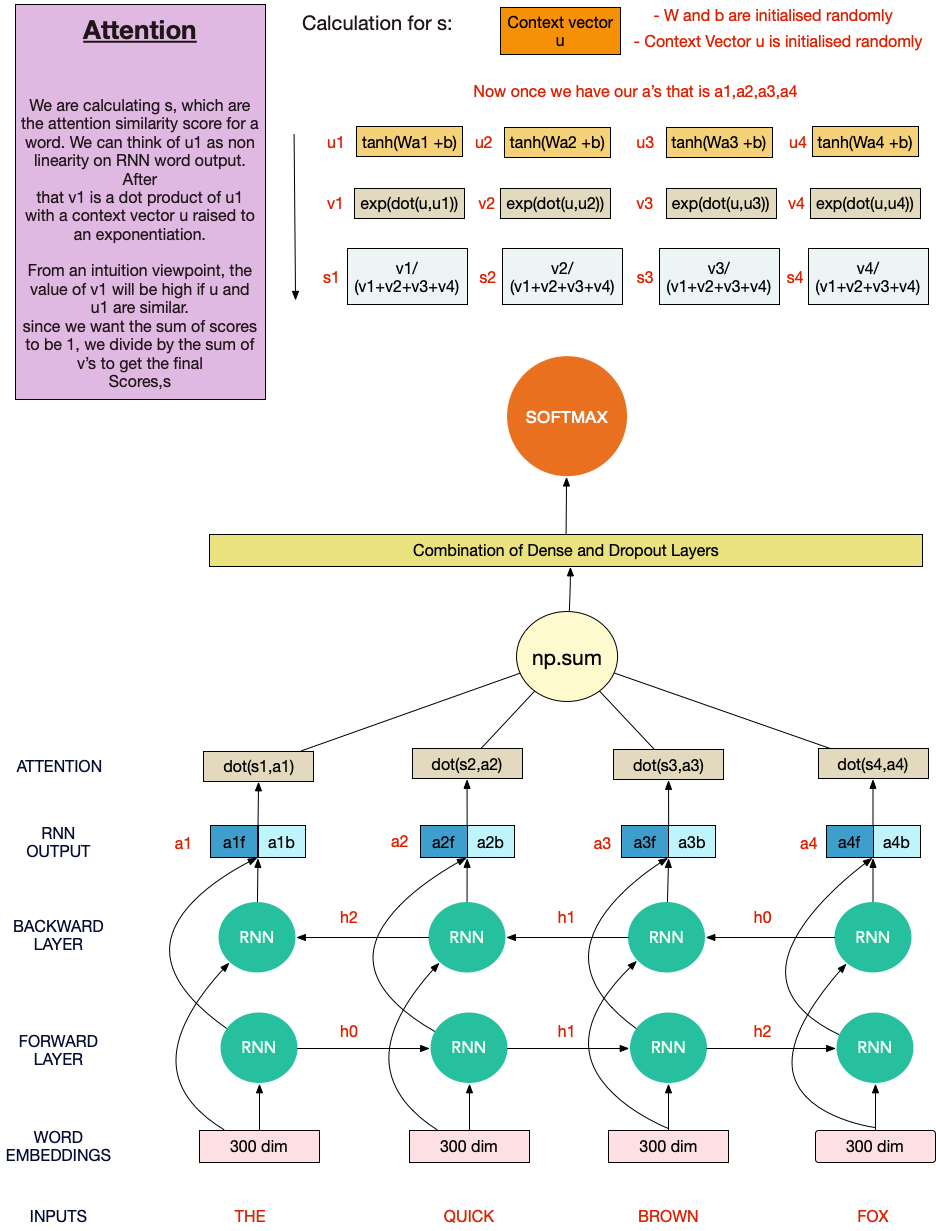

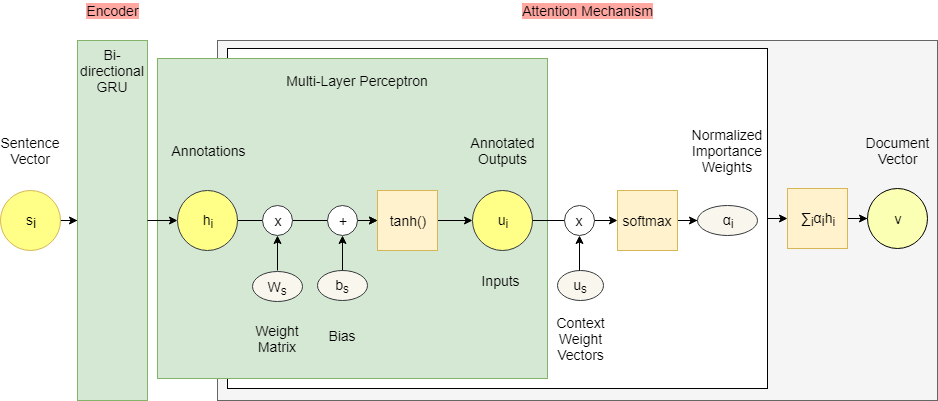

Hierarchical Attention Network on GitHub. Because words form sentences and sentences form the document, the Hierarchical Attention Network's representation of the document uses this hierarchy. I admit that a HAN model can still be trained without any pre-trained word2vec model.

Pin Op News Office 365 Azure And Sharepoint From in.pinterest.com

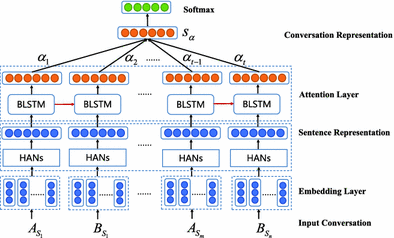

A Hierarchical Graph Attention Network for Stock Movement Prediction. The Bi-Directional Attention Flow (BiDAF) network is a multi-stage hierarchical process. Figure 1 gives an overview of the framework of our reinforcement-learning-guided comment generation approach via a two-layer attention network, which includes an offline training stage and online testing.

The one-level LSTM attention model and the Hierarchical Attention Network can only achieve 65, while the BiLSTM achieves roughly 64.

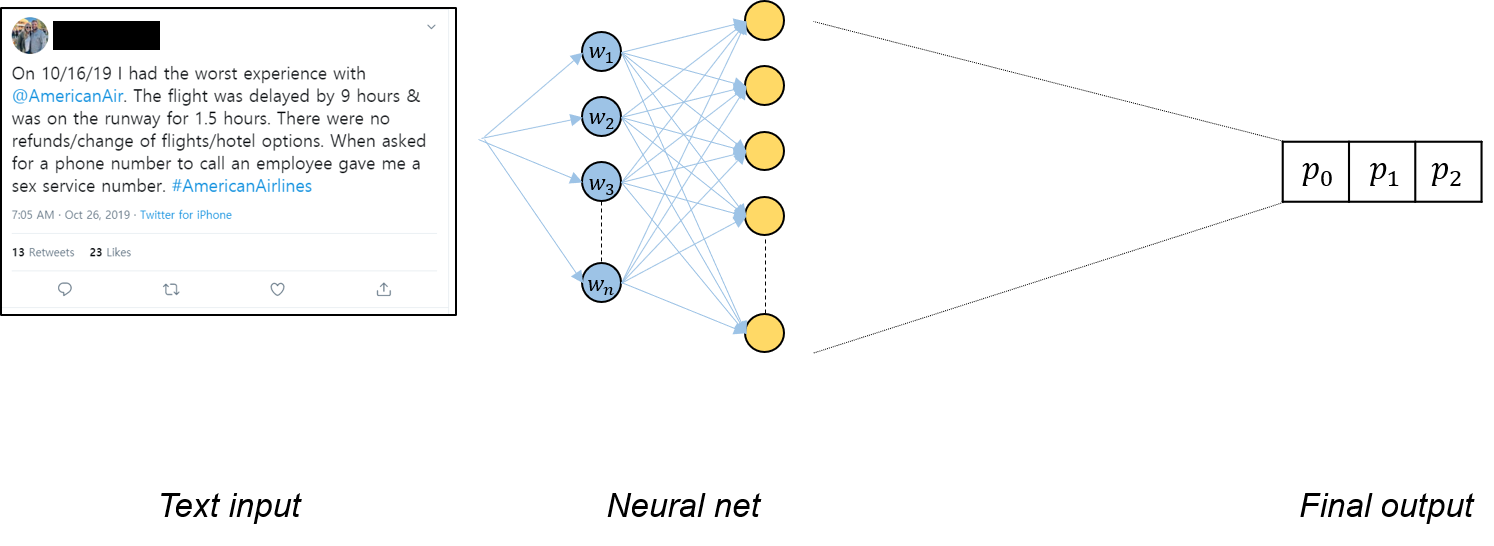

This hierarchical attention network assigns weights (pays attention) to individual tokens and statements with respect to different code representations. The format becomes `label\t\tsentence1\tsentence2`. Document classification with Hierarchical Attention Networks in TensorFlow.
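The record format mentioned above looks tab-delimited: a double tab after the label and a single tab between sentences. That reading is an assumption, since the tab escapes appear to have been lost in extraction. Under that assumption, a minimal parser might look like the sketch below; `parse_document_line` is a hypothetical helper name, not part of any of the repositories listed here.

```python
def parse_document_line(line):
    """Split one 'label<TAB><TAB>sentence1<TAB>sentence2...' record.

    Returns (label, sentences). The delimiter layout is an assumption
    inferred from the format description, not a documented spec.
    """
    # The double tab separates the label from the document body.
    label, _, body = line.rstrip("\n").partition("\t\t")
    # Single tabs separate the sentences; empty pieces are dropped.
    sentences = [s for s in body.split("\t") if s]
    return label, sentences


label, sentences = parse_document_line("5\t\tGreat food.\tWill come back.\n")
print(label, sentences)
```

Keeping the sentences as a list, rather than one flat string, preserves the sentence boundaries that a hierarchical model needs later.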

Source: github.com

My implementation of Hierarchical Attention Networks for Document Classification (Yang et al., 2016). Run `HAN.ipynb` to train the model.

Source: pinterest.com

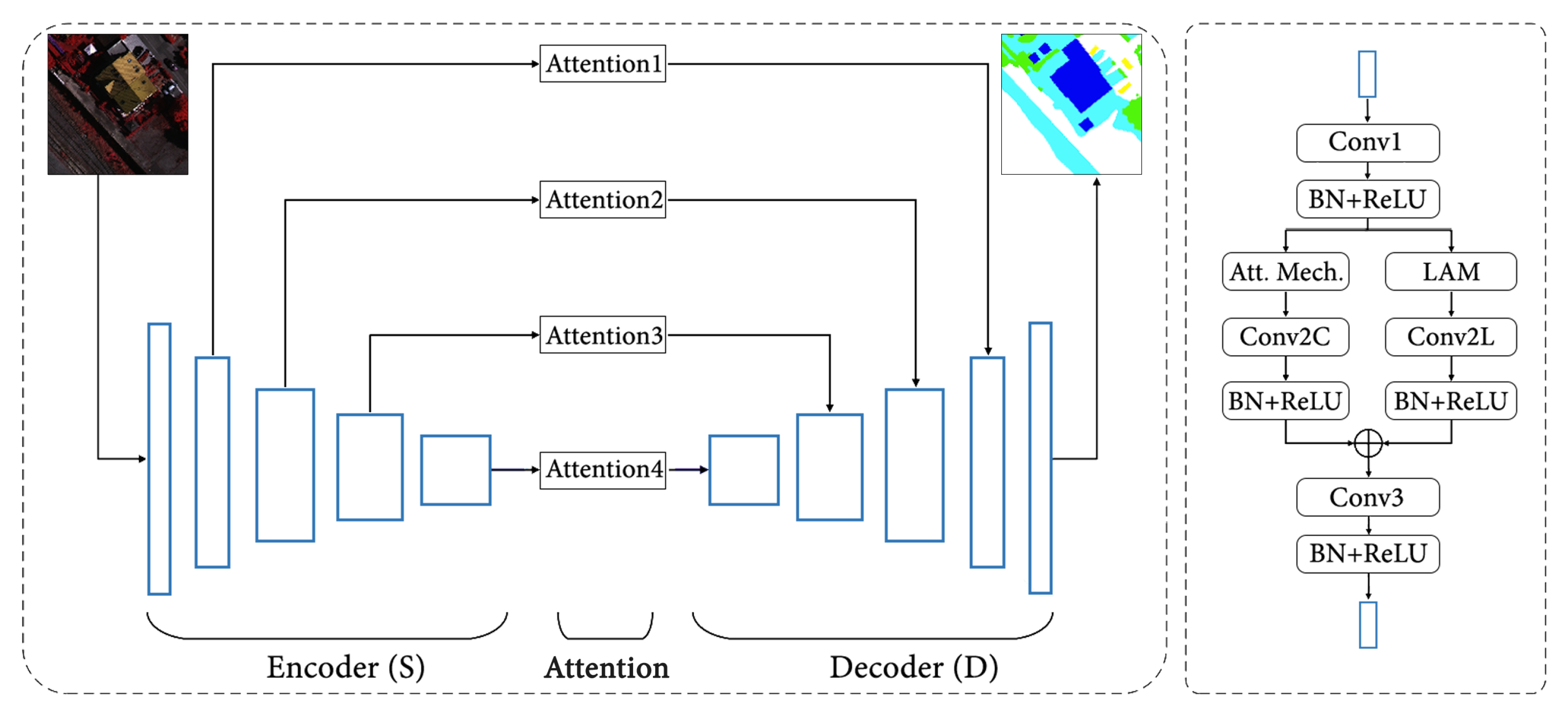

Specifically, we employ spatial and channel-wise attention to integrate appearance cues and pyramidal features in a novel fashion. The Hierarchical Attention Network is a novel deep learning architecture that takes advantage of the hierarchical structure of documents to construct a detailed representation of the document.
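For reference, the attention pooling that Yang et al. describe for HAN can be written as follows. The notation is the usual one for that paper and is restated here as a sketch: $h_{it}$ is the encoder annotation of word $t$ in sentence $i$, $h_i$ is the encoder annotation of sentence $i$, and $W_w, b_w, u_w, W_s, b_s, u_s$ are learned parameters.

```latex
% Word-level attention: pool word annotations into a sentence vector s_i
u_{it} = \tanh(W_w h_{it} + b_w), \qquad
\alpha_{it} = \frac{\exp(u_{it}^{\top} u_w)}{\sum_{t'} \exp(u_{it'}^{\top} u_w)}, \qquad
s_i = \sum_{t} \alpha_{it} h_{it}

% Sentence-level attention: pool sentence annotations into a document vector v
u_i = \tanh(W_s h_i + b_s), \qquad
\alpha_i = \frac{\exp(u_i^{\top} u_s)}{\sum_{i'} \exp(u_{i'}^{\top} u_s)}, \qquad
v = \sum_{i} \alpha_i h_i
```

The same pooling form is applied twice, which is what makes the document representation hierarchical.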

Source: spiedigitallibrary.org

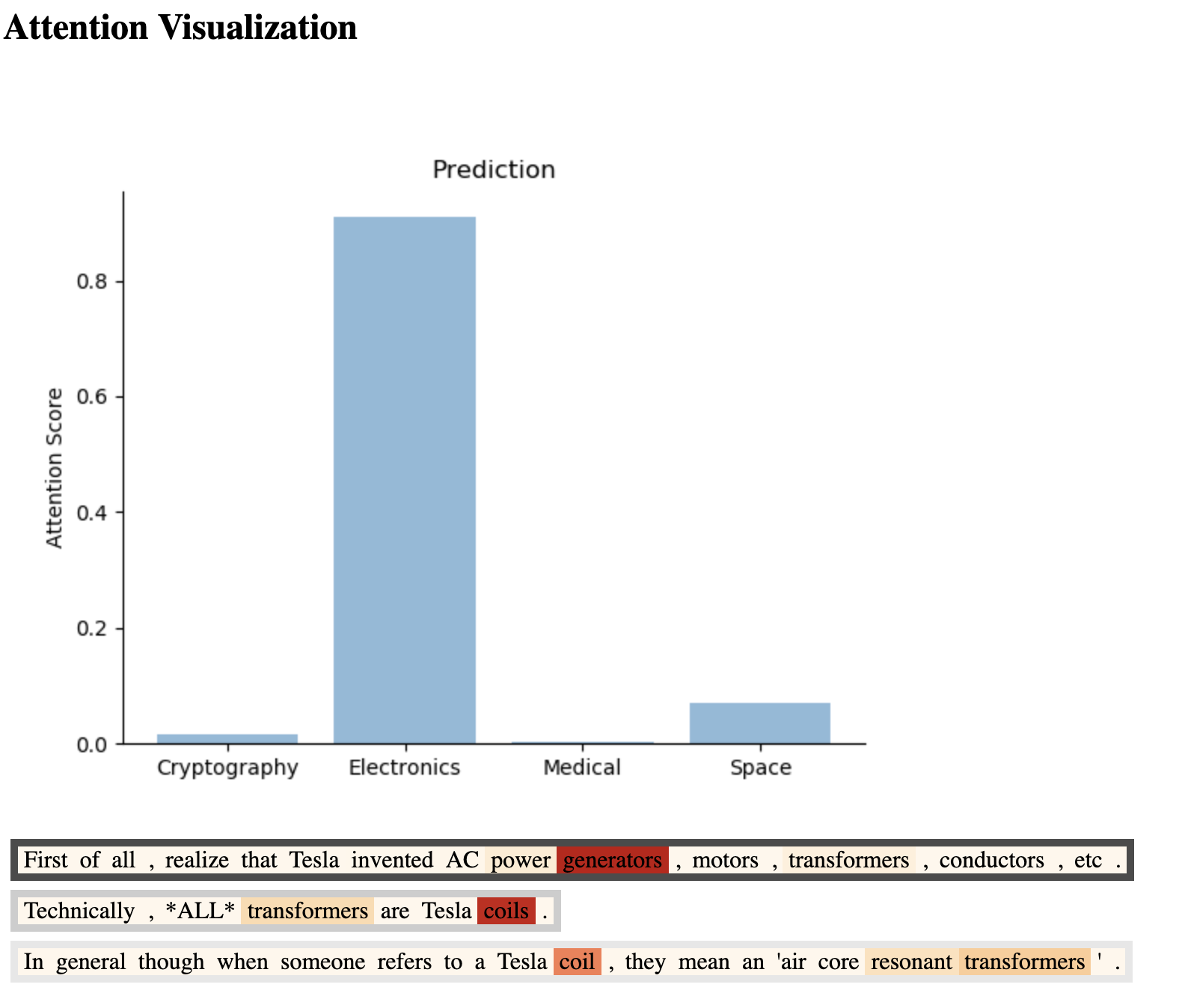

We can try to learn that structure, or we can feed this hierarchical structure into the model and see whether it improves the performance of existing models. Two key features differentiate HAN from existing approaches to document classification: (1) it exploits the hierarchical nature of text data, and (2) it adapts the attention mechanism to document classification. Text Classification, Part 3: Hierarchical attention network.
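To make the two features concrete, here is a minimal pure-Python sketch of attention pooling applied at two levels: words into sentence vectors, then sentence vectors into a document vector. It is an illustration under simplifying assumptions, not any repository's implementation. The recurrent encoders that a real HAN places before each attention step are omitted, the parameters are hand-picked rather than trained, and `attention_pool` and `encode_document` are hypothetical names.

```python
import math


def attention_pool(vectors, weight, bias, context):
    """Return (pooled_vector, attention_weights) for a list of vectors."""
    scores = []
    for h in vectors:
        # u = tanh(W h + b): hidden representation of one item
        u = [math.tanh(sum(w * x for w, x in zip(row, h)) + b)
             for row, b in zip(weight, bias)]
        # Score = similarity between u and the context vector
        scores.append(sum(ui * ci for ui, ci in zip(u, context)))
    # Softmax over items, shifted by the max for numerical stability
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    alphas = [e / total for e in exps]
    # Attention-weighted sum of the original vectors
    dim = len(vectors[0])
    pooled = [sum(a * h[d] for a, h in zip(alphas, vectors)) for d in range(dim)]
    return pooled, alphas


def encode_document(document, word_params, sentence_params):
    """document: list of sentences, each a list of word vectors."""
    # Level 1: pool the words of each sentence into a sentence vector
    sentence_vectors = [attention_pool(words, *word_params)[0] for words in document]
    # Level 2: pool the sentence vectors into a document vector
    return attention_pool(sentence_vectors, *sentence_params)


# Toy 2-dimensional example with untrained, hand-picked parameters
identity = [[1.0, 0.0], [0.0, 1.0]]
params = (identity, [0.0, 0.0], [1.0, 1.0])
doc = [
    [[0.1, 0.2], [0.9, 0.8]],               # sentence 1: two words
    [[0.3, 0.3], [0.2, 0.1], [0.5, 0.4]],   # sentence 2: three words
]
doc_vector, sentence_alphas = encode_document(doc, params, params)
print(doc_vector, sentence_alphas)
```

The returned sentence weights sum to 1, so they can be read as how much each sentence contributes to the document vector.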

Source: humboldt-wi.github.io

I'm very thankful to Keras, which made building this project painless. API for loading text data. In the bottom layer, a user-guided intra-attention mechanism with a personalized multi-modal embedding correlation scheme is proposed to learn an effective embedding for each modality. Multilingual hierarchical attention networks toolkit.

Run `yelp-preprocess.ipynb` to preprocess the data. PyTorch implementation of Hierarchical Attention-Based Recurrent Highway Networks for Time Series Pr. After the exercise of building convolutional, RNN, and sentence-level attention RNN models, I have finally come to implement Hierarchical Attention Networks for Document Classification.

Source: sciencedirect.com

Hierarchical Attention Networks: a PyTorch Tutorial to Text Classification. We propose an end-to-end Hierarchical Attention Matting Network (HAttMatting), which can predict the better structure of alpha mattes from single RGB images without additional input.

Source: pinterest.com

Hierarchical Attention Network, read in 2017/10 by szx. Task instruction. Hierarchical Attention Networks for Document Classification. This blended attention mechanism…

Source: pinterest.com

Hierarchical Attention Network (HAN): HAN was proposed by Yang et al. We know that documents have a hierarchical structure: words combine to form sentences, and sentences combine to form documents. In all the HAN GitHub repositories I have seen so far, a default embedding layer was used without loading a pre-trained word2vec model.
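As an illustration of the alternative to a default embedding layer, the sketch below builds an embedding matrix from pre-trained word vectors. It assumes the vectors are already loaded into a plain dict, that vocabulary indices run from 1 to `len(vocab)`, and that row 0 is reserved for padding. The function name, the toy vectors, and the random range for unknown words are all hypothetical choices.

```python
import random


def build_embedding_matrix(vocab, pretrained, dim, seed=0):
    """Build a (len(vocab) + 1) x dim matrix of initial embedding weights.

    vocab:      word -> row index, with indices 1..len(vocab)
    pretrained: word -> list of `dim` floats (e.g. loaded word2vec vectors)
    """
    rng = random.Random(seed)
    # Row 0 stays all zeros and is reserved for the padding token.
    matrix = [[0.0] * dim for _ in range(len(vocab) + 1)]
    for word, index in vocab.items():
        vector = pretrained.get(word)
        if vector is None:
            # Words without a pre-trained vector get small random values.
            vector = [rng.uniform(-0.05, 0.05) for _ in range(dim)]
        matrix[index] = list(vector)
    return matrix


vocab = {"good": 1, "food": 2, "zzyzx": 3}
pretrained = {"good": [0.1, 0.2, 0.3], "food": [0.4, 0.5, 0.6]}  # made-up vectors
matrix = build_embedding_matrix(vocab, pretrained, dim=3)
print(len(matrix), matrix[1])
```

A matrix like this would typically be passed to the framework's embedding layer as its initial weights, either frozen or fine-tuned during training.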

Source: humboldt-wi.github.io

Form a hierarchical attention network. However, to the best of my knowledge, at least in PyTorch, there is no implementation on GitHub using it.

Source: pinterest.com

Let's examine what they mean. In the middle layer, a user-guided inter-attention mechanism for cross-modal attention is developed.

Source: buomsoo-kim.github.io

The custom layer is very powerful and flexible. However, to serve the purpose of re…

Source: awesomeopensource.com

Reproducing Yang et al., Hierarchical Attention Networks for Document Classification. I felt there could be some major improvement. However, I didn't exactly follow the authors' text preprocessing.

Source: kaggle.com

Source: link.springer.com

Source: humboldt-wi.github.io

Source: mdpi.com

Source: in.pinterest.com

I am still using the Keras data preprocessing logic, which keeps the top 20,000 or 50,000 tokens, skips the rest, and pads the remainder with 0.
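The preprocessing idea described here (keep only the most frequent tokens, skip the rest, pad with 0) can be sketched in plain Python as below. This is a stand-in that mimics the idea and is not the Keras API. `fit_vocab` and `encode` are hypothetical helpers, whitespace tokenization is a simplification, and padding at the end of the sequence is an arbitrary choice.

```python
from collections import Counter


def fit_vocab(texts, max_words):
    """Map the `max_words` most frequent tokens to indices 1..max_words."""
    counts = Counter(token for text in texts for token in text.lower().split())
    # Index 0 is reserved for padding, so real tokens start at 1.
    return {word: i
            for i, (word, _) in enumerate(counts.most_common(max_words), start=1)}


def encode(text, vocab, max_len):
    """Turn text into a fixed-length list of token indices."""
    # Tokens outside the vocabulary are skipped entirely.
    ids = [vocab[t] for t in text.lower().split() if t in vocab]
    ids = ids[:max_len]                       # truncate long texts
    return ids + [0] * (max_len - len(ids))   # pad short texts with 0


texts = ["the food was good", "the service was slow", "good food good mood"]
vocab = fit_vocab(texts, max_words=4)
encoded = [encode(t, vocab, max_len=5) for t in texts]
print(vocab)
print(encoded)
```

In a real run `max_words` would be 20,000 or 50,000 rather than 4. The small value here only makes the skipping of rare tokens visible.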
