Hierarchical Attention Mechanism. The HCRF-AM model consists of an Attention Mechanism (AM) module and an Image Classification (IC) module. In this paper, we argue that position-aware representations are beneficial to this task. The attention flow is organized in the following hierarchical order.

Hierarchical LSTMs With Adaptive Attention For Visual Captioning. From computer.org

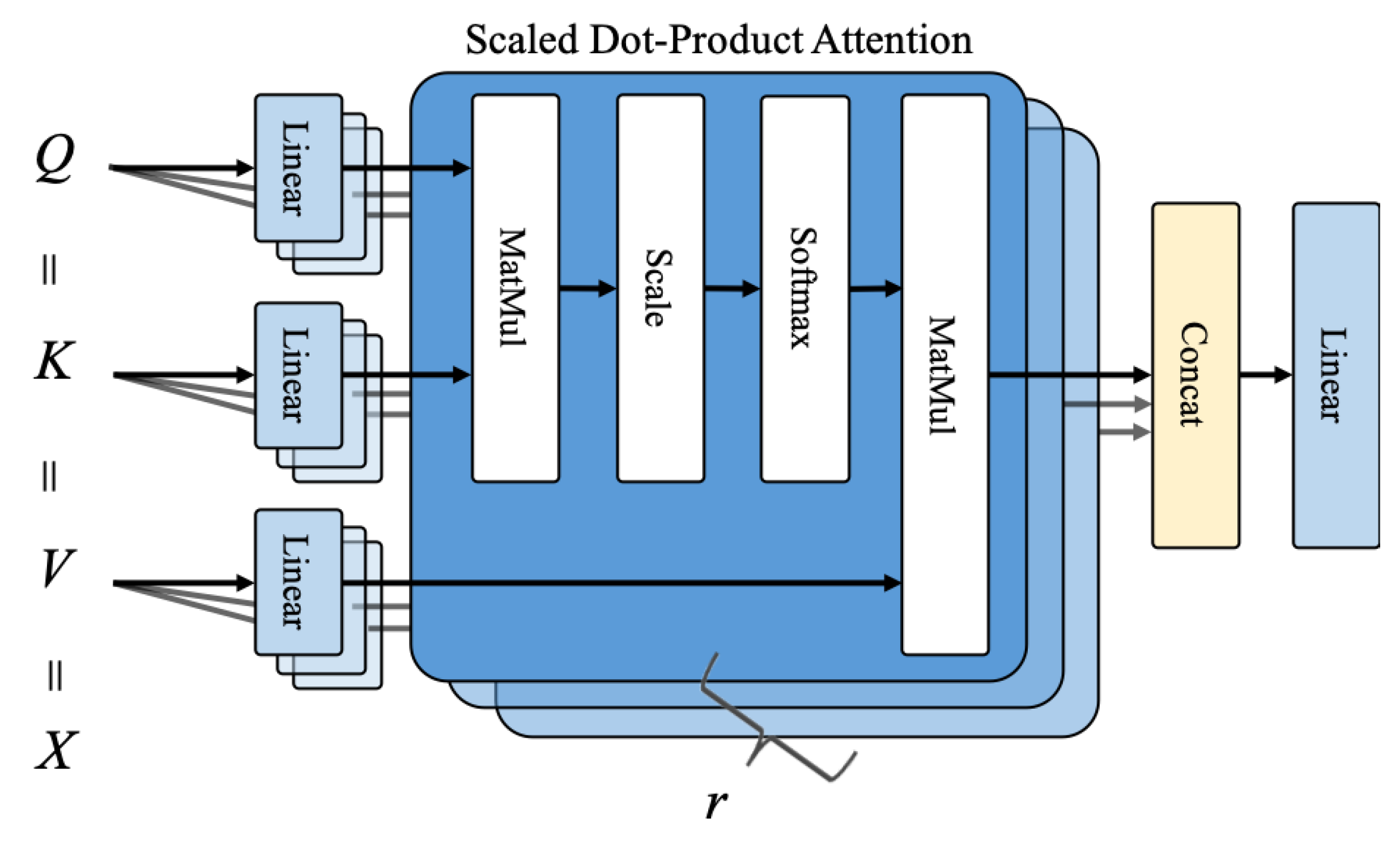

We apply the multi-head hierarchical attention mechanism to centrally computed critics so that the critics can process the received information more accurately and assist actors in choosing better actions. (2) implementing AM to explicitly ... In the bottom layer, a user-guided intra-attention mechanism with a personalized multi-modal embedding correlation scheme is proposed to learn an effective embedding for each modality. In this paper, to better accomplish the GHIC task and assist pathologists in clinical diagnosis, an intelligent Hierarchical Conditional Random Field based Attention Mechanism (HCRF-AM) model is proposed. The overall architecture of the Hierarchical Attention Network (HAN) is shown in Fig.

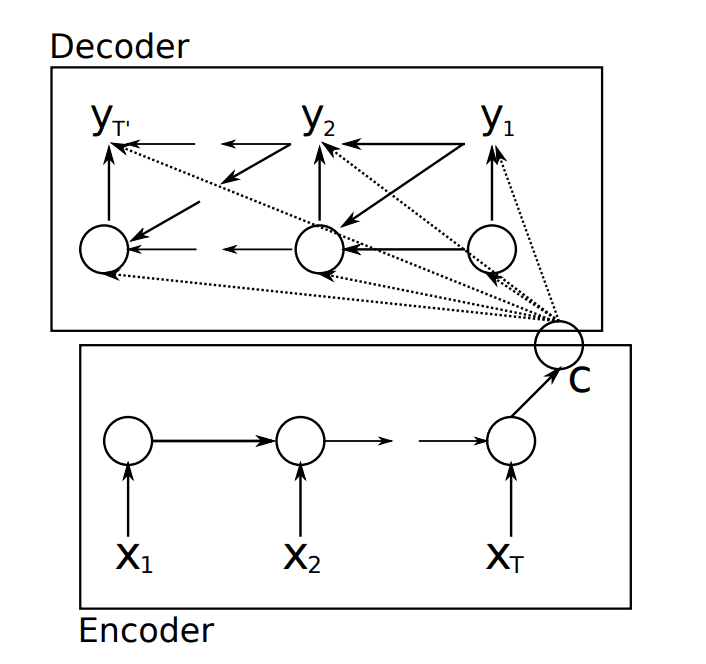

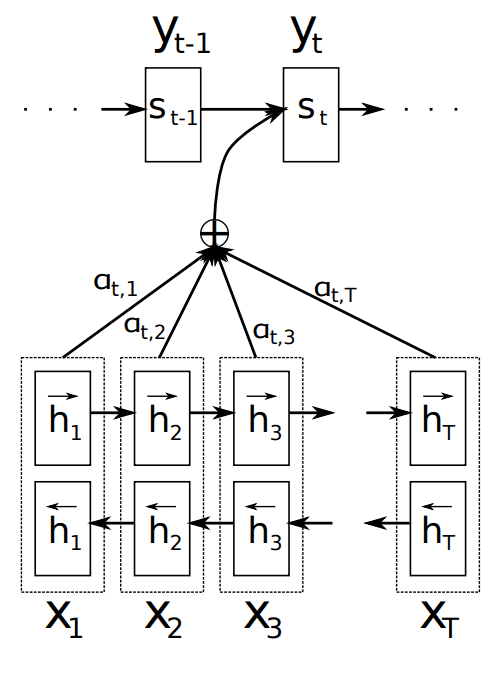

In 2014 Bahdanau et al. ... We describe the details of the different components in the following sections. It improves the extensibility of our model and its consistency with practice. The key features that differentiate HAN from existing approaches to document classification are that (1) it exploits the hierarchical nature of text data and (2) the attention mechanism is adapted for document classification. HAN consists of four parts: a word sequence encoder, a word-level attention layer, a sentence encoder, and a sentence-level attention layer. The attention mechanism was originally proposed with reference to human visual focus for acquiring information, and it achieves appealing performance in image recognition.
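
As a rough illustration of how the two attention levels named above fit together, the sketch below pools word vectors into sentence vectors and then sentence vectors into one document vector. It is a minimal sketch under stated assumptions, not the HAN implementation: the word and sentence encoders are omitted (vectors are used as given), the weighted-sum pooling step is assumed, and the names `attend`, `document_vector`, `word_context`, and `sentence_context` are hypothetical.

```python
import math


def attend(units, context):
    """Pool a list of vectors into one, weighting each by softmax(dot(unit, context))."""
    scores = [sum(a * b for a, b in zip(u, context)) for u in units]
    peak = max(scores)  # subtract the maximum score for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    dim = len(units[0])
    return [sum(w * u[d] for w, u in zip(weights, units)) for d in range(dim)]


def document_vector(document, word_context, sentence_context):
    """document is a list of sentences; each sentence is a list of word vectors."""
    # Level 1 (assumed pooling): word-level attention turns each sentence into one vector.
    sentence_vectors = [attend(words, word_context) for words in document]
    # Level 2 (assumed pooling): sentence-level attention yields one document vector.
    return attend(sentence_vectors, sentence_context)


# Toy document: two sentences with 2-dimensional word vectors (made-up values).
doc = [
    [[1.0, 0.0], [0.0, 1.0]],
    [[0.5, 0.5]],
]
vec = document_vector(doc, word_context=[1.0, 0.0], sentence_context=[0.0, 1.0])
```

In a trained model the two context vectors would be learned parameters and the inputs would come from the word and sentence encoders; here they are fixed by hand only to keep the example self-contained.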

Source: pinterest.com

... (2), where t is a non-linear activation function (tanh in our case). Then the word-level attention layer builds the question-aware passage sentence and candidate option representations.

Source: machinelearningmastery.com

In the AM module an HCRF ... The Hierarchical Attention Network (HAN) was proposed by Yang et al. in 2016. The hierarchical attention mechanism ... In another study based on ICU data, feature-level attention was used rather than attention on embeddings.

Source: computer.org

A low-level feature spatial attention module (LFSAM) is developed to learn the spatial relationships between different pixels on each channel in the low-level stage of the encoder; a high-level feature channel attention module ... They are designed to assign different weights to friends.

Source: pinterest.com

The proposed hierarchical attention mechanism fully exploits relevant contexts for feature learning, and the weights of new features can be trained in the same way.

Source: pinterest.com

Therefore we propose a ... To the best of our knowledge, we are the first to jointly capture relevant information from both low- and high-order features.

Source: in.pinterest.com

Form a hierarchical attention network. That is to say the hierarchical attention mechanism and the ... The importance of a unit is thus measured as the similarity of $u_j$ to the context vector $u_g$, which is jointly learned during the training process, giving the normalized attention weight (written here as $\alpha_j$):

$$\alpha_j = \frac{\exp(u_j^\top u_g)}{\sum_{k=1}^{J} \exp(u_k^\top u_g)}$$
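
The weighting in the passage above (each unit's similarity to the context vector, exponentiated and divided by the sum over all J units) can be checked numerically with a few lines of plain Python. This is a minimal sketch, assuming the similarity is a dot product; the function name `attention_weights` and the toy vectors are hypothetical and not taken from any of the quoted papers.

```python
import math


def attention_weights(units, context):
    """Return one weight per unit: softmax over dot(unit, context)."""
    scores = [sum(a * b for a, b in zip(u, context)) for u in units]
    peak = max(scores)  # shifting by the maximum leaves the softmax unchanged
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


# Three toy units and a context vector (made-up values for illustration).
units = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context = [1.0, 0.0]
weights = attention_weights(units, context)
```

The first and third units have the same dot product with the context vector, so they receive equal weights, and both outweigh the second unit. The weights always sum to 1.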

Source: machinelearningmastery.com

Their framework is one of the earlier attempts to apply attention to problems other than neural machine translation.

Source: in.pinterest.com

In addition, most existing methods ignore the position information of the aspect when encoding the sentence. Further, we introduce a hierarchical attention mechanism into our segmentation framework.

Source: pinterest.com

It consists of several parts. This shows that the hierarchical attention mechanism considers single features, combined features, and overall features to improve the utilization of structured data; it also shows that extracting the User-POI matching degree from text can indeed mine more implicit information from unstructured data. More recently, there has been a growing interest in incorporating the attention mechanism into encoding relationships of neighboring nodes and promoting node-embedding learning solutions [37, 35].

Source: pinterest.com

To tackle such issues, we propose a deep neural network-based model with spatiotemporal hierarchical attention mechanisms, called ST-HAttn for short, for Ms-SLCFP. Our model consists of two layers of attention neural networks; the first attention layer learns the influence weights of members when the group ...

Source: computer.org

To this end, this paper proposes a novel model for Group Recommendation using Hierarchical Attention Mechanism (GRHAM), which can dynamically adjust the weight of members in group decision-making. ... (2016) demonstrated with their hierarchical attention network (HAN) that attention can be effectively used on various levels.

Source: mdpi.com

The mechanism is divided into three parts.

Source: paperswithcode.com

We use a bi-directional recurrent neural network (BiRNN) to encode passage sentences, question, and candidate options separately.

Source: computer.org

In speech recognition, attention aligns characters and audio.

Source: computer.org

Source: blogs.rstudio.com

The hierarchical attention critic adopts a bi-level attention structure composed of the agent level and the group level.

Source: pinterest.com

Source: pinterest.com

The notable contributions are that ST-HAttn performs attention mechanisms (AM) in two ways.
