Hierarchical Attention Layer. Hierarchical Attention Network (HAN): to learn the context-based features, the architecture of the HAN classifier has an embedding layer, encoders, and attention layers. It consists of three components. Here, a hierarchical attention strategy is proposed to capture the associations between texts and the hierarchical structure. If one chooses to use BERT to create a sentence embedding for each sentence, then the ...

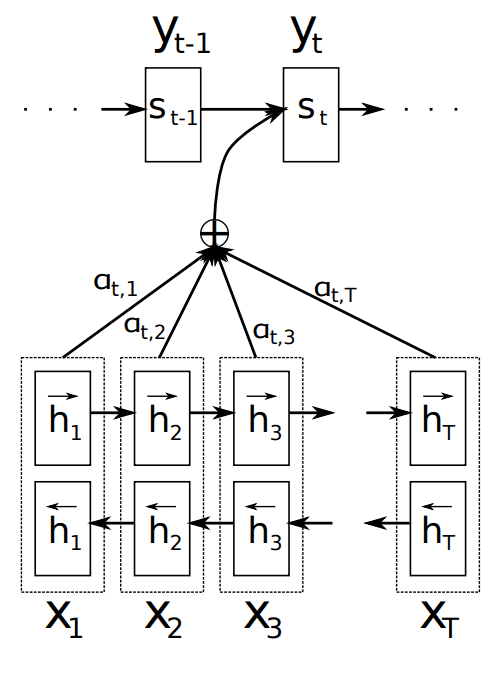

Attention in Neural Networks 1: Introduction to Attention Mechanism, Buomsoo Kim. From buomsoo-kim.github.io

We present an eval... The Hierarchical Attention Network (HAN) was proposed by Yang et al. A Keras layer that implements an attention mechanism with a context/query vector for temporal data. First, we propose a pyramid diverse attention, as shown in Fig. In addition, a projective bilinear function is designed in a meaningful second-order interaction encoder to effectively learn more fine-grained and comprehensive second-order feature interactions. I am trying to create hierarchical attention in TensorFlow 2.0 using the AdditiveAttention Keras layer.

The HAN architecture has four parts: a word sequence encoder, a word-level attention layer, a sentence encoder, and a sentence-level attention layer.
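The four parts listed above can be sketched end to end in NumPy. This is a minimal forward pass under stated assumptions, not the authors' implementation: the recurrent encoders are replaced by a simple per-step tanh projection (`encode`), and every parameter name and size here is made up for illustration.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def attention_pool(h, W, b, u):
    """Pool a sequence h of shape (T, d) into a single d-vector.

    Each step is projected with tanh(W h_t + b) and scored against a
    context vector u; the scores are normalised with a softmax.
    """
    hidden = np.tanh(h @ W + b)   # (T, a)
    alpha = softmax(hidden @ u)   # (T,) attention weights, sum to 1
    return alpha @ h, alpha       # weighted sum of the encoder states


def encode(x, P):
    """Stand-in for a recurrent encoder (assumption): per-step projection."""
    return np.tanh(x @ P)


def han_forward(doc, params):
    """doc: list of sentences, each an array of shape (num_words, emb_dim)."""
    sent_vecs = []
    for words in doc:
        h_words = encode(words, params["P_word"])            # word encoder
        s, _ = attention_pool(h_words, *params["word_att"])  # word attention
        sent_vecs.append(s)
    h_sents = encode(np.stack(sent_vecs), params["P_sent"])  # sentence encoder
    return attention_pool(h_sents, *params["sent_att"])      # sentence attention


rng = np.random.default_rng(0)
emb, d, a = 8, 6, 4  # hypothetical embedding, state, and attention sizes
params = {
    "P_word": rng.normal(size=(emb, d)),
    "word_att": (rng.normal(size=(d, a)), np.zeros(a), rng.normal(size=a)),
    "P_sent": rng.normal(size=(d, d)),
    "sent_att": (rng.normal(size=(d, a)), np.zeros(a), rng.normal(size=a)),
}
doc = [rng.normal(size=(n, emb)) for n in (5, 3, 7)]  # three sentences
doc_vec, sent_alpha = han_forward(doc, params)
```

The same `attention_pool` function is used at both levels; only the inputs differ (word states within one sentence, then sentence vectors within the document), which is what makes the attention hierarchical.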

We describe the details of different components in the following sections. The attentive process in each layer determines whether to pass or suppress feature maps for use in the next convolution. The bidirectional GRU with attention layer is then used to ... It consists of several parts. A Bi-LSTM layer, a multi-task driven inter-attention layer, and an output layer. 2 Hierarchical Attention Networks. The overall architecture of the Hierarchical Attention Network (HAN) is shown in Fig.

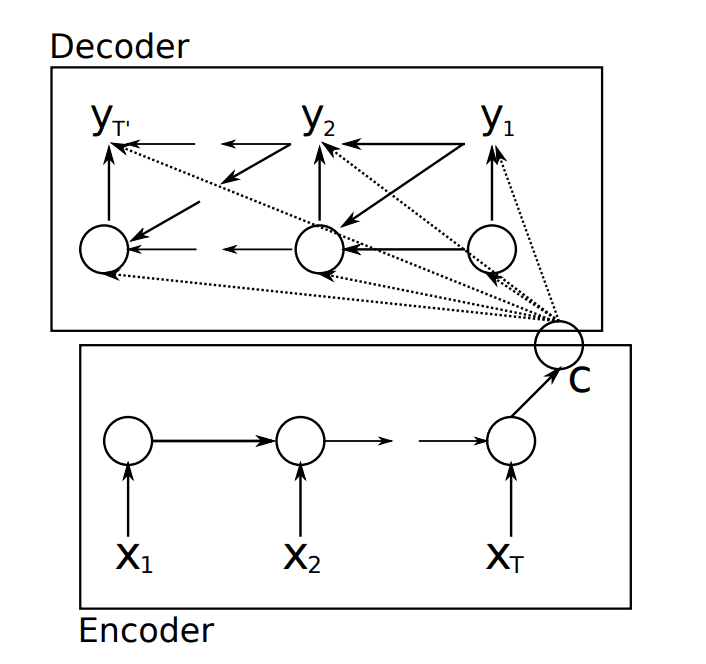

Source: machinelearningmastery.com

At the node level, a structure-preserving attention is developed to preserve the structure features of each node in the neighborhood subgraph. Follows the work of Yang et al. Although the highway networks allow unimpeded information flow across layers, the information stored in different layers captures temporal dynamics at different levels and will thus have an impact on predicting future behaviors of ... 3.1 Task Specific Encoder.

Specifically, the first attention layer learns user long-term preferences based on the historical purchased item representation, while the second one outputs the final user representation. It consists of three components. Then we develop a hierarchical attention-based recurrent layer to model the dependencies among different levels of the hierarchical structure in a top-down fashion. Next we will describe the ...

The implementation begins with the imports `import keras`, `import Attention`, `from keras.engine.topology import Layer, Input`, `from keras import backend as K`, and `from keras import initializers`, followed by the class `Hierarchical_AttentionLayer` (Hierarchical Attention Layer implementation by Arkadipta De, MIT licensed).

Following the paper Hierarchical Attention Networks for Document Classification: a word-level bidirectional GRU to get a rich representation of words; Word Attention, word-level attention to get the important information in a sentence; Sentence Encoder, a sentence-level bidirectional GRU to get ...
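The word-level and sentence-level encoders mentioned above are both bidirectional GRUs. As a rough illustration of what such an encoder computes, here is a small NumPy sketch. It uses the update rule h = (1 - z) * h_prev + z * candidate; the weight names, initialisation, and sizes are assumptions for the example, and a real model would use a trained Keras or TensorFlow layer instead.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def gru_step(x, h, p):
    """One GRU update; p holds input (W), recurrent (U), and bias (b) weights."""
    z = sigmoid(x @ p["Wz"] + h @ p["Uz"] + p["bz"])           # update gate
    r = sigmoid(x @ p["Wr"] + h @ p["Ur"] + p["br"])           # reset gate
    cand = np.tanh(x @ p["Wh"] + (r * h) @ p["Uh"] + p["bh"])  # candidate state
    return (1.0 - z) * h + z * cand


def run_gru(xs, p, hidden):
    """Run a GRU over xs of shape (T, emb); returns states of shape (T, hidden)."""
    h = np.zeros(hidden)
    states = []
    for x in xs:
        h = gru_step(x, h, p)
        states.append(h)
    return np.stack(states)


def bigru(xs, p_fwd, p_bwd, hidden):
    """Concatenate forward and backward states for every time step."""
    fwd = run_gru(xs, p_fwd, hidden)
    bwd = run_gru(xs[::-1], p_bwd, hidden)[::-1]  # re-align to input order
    return np.concatenate([fwd, bwd], axis=1)     # (T, 2 * hidden)


def init_params(rng, emb, hidden):
    """Small random weights; purely illustrative."""
    p = {}
    for gate in "zrh":
        p["W" + gate] = rng.normal(scale=0.1, size=(emb, hidden))
        p["U" + gate] = rng.normal(scale=0.1, size=(hidden, hidden))
        p["b" + gate] = np.zeros(hidden)
    return p


rng = np.random.default_rng(0)
emb, hidden = 8, 5
words = rng.normal(size=(6, emb))  # one sentence of six word embeddings
h_words = bigru(words, init_params(rng, emb, hidden),
                init_params(rng, emb, hidden), hidden)
```

Each row of `h_words` summarises the sentence around one word from both directions, which is the representation the attention layer then weights.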

Specifically, the hierarchical layer includes l levels, each of which consists of two symmetrical calculations. Finally, we design a hybrid method which is capable of predicting the categories of ...

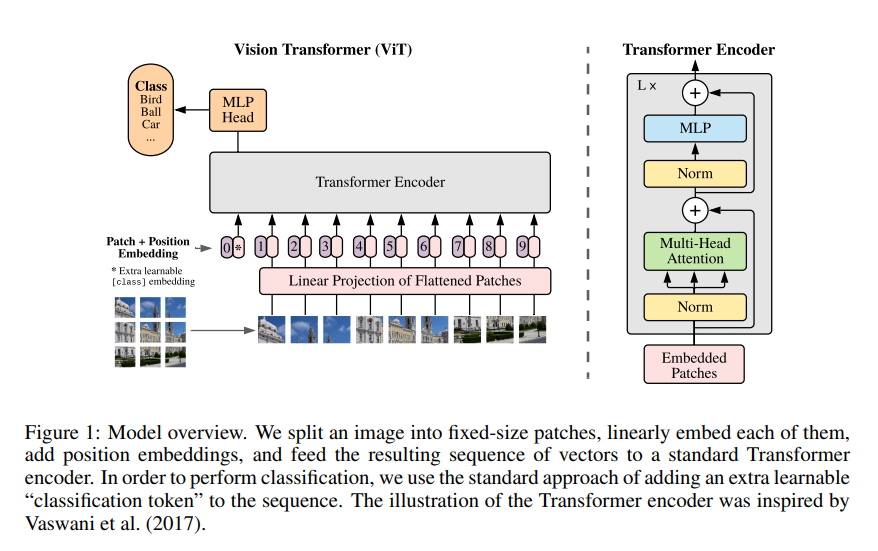

Source: computer.org

Hierarchical attention mechanism. The coarsening operation and the refining operation. The Bi-LSTM and attention layers are applied at both the word and sentence levels. In this paper, we propose a few variations of the Hierarchical Attention Network (HAN) that directly incorporate the pre-defined hierarchical structure of the output label space into the network structure, via hierarchical output layers that represent different levels of the output label hierarchy.
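The text does not say how those hierarchical output layers are built, so the following is only one plausible reading, stated as an assumption: a softmax over top-level labels, a separate softmax over the children of each top-level label, and a leaf probability obtained as the product of the two. All names, shapes, and label counts are hypothetical.

```python
import numpy as np


def softmax(x):
    """Numerically stable softmax over a 1-D array."""
    e = np.exp(x - np.max(x))
    return e / e.sum()


def hierarchical_output(doc_vec, W_parent, W_child):
    """Two-level label prediction from a document vector.

    W_parent: (d, num_parents). W_child: list with one (d, num_children)
    matrix per parent. Returns P(parent) and a dict of P(parent, child).
    """
    p_parent = softmax(doc_vec @ W_parent)
    joint = {}
    for k, Wc in enumerate(W_child):
        p_child_given_parent = softmax(doc_vec @ Wc)
        for j, pc in enumerate(p_child_given_parent):
            joint[(k, j)] = float(p_parent[k] * pc)  # chain rule over the label tree
    return p_parent, joint


rng = np.random.default_rng(0)
d = 6
doc_vec = rng.normal(size=d)        # e.g. the output of sentence-level attention
W_parent = rng.normal(size=(d, 2))  # two hypothetical top-level labels
W_child = [rng.normal(size=(d, 3)), rng.normal(size=(d, 2))]  # 3 and 2 sub-labels
p_parent, joint = hierarchical_output(doc_vec, W_parent, W_child)
```

Because each child distribution is conditioned on its parent, the leaf probabilities form a valid distribution over the whole label tree and stay consistent with the top-level prediction.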

This repository is an implementation of the article Hierarchical Attention Networks for Document Classification (Yang et al.), in which one can choose whether to use a traditional BiLSTM to create the sentence embedding for each sentence or to use BERT for this task (configurable). Paper: https://www.cs.cmu.edu/~diyiy/docs/naacl16.pdf (Hierarchical Attention Networks for Document Classification). We employed GAT as an input layer combined with a multi-head attention mechanism.

Source: dl.acm.org

In this paper, we propose a novel two-layer hierarchical attention network, which takes the above properties into account, to recommend the next item a user might be interested in. Towards this end, we employ a feature learning layer with a hierarchical attention mechanism to jointly extract more generalized and dominant features and feature interactions.

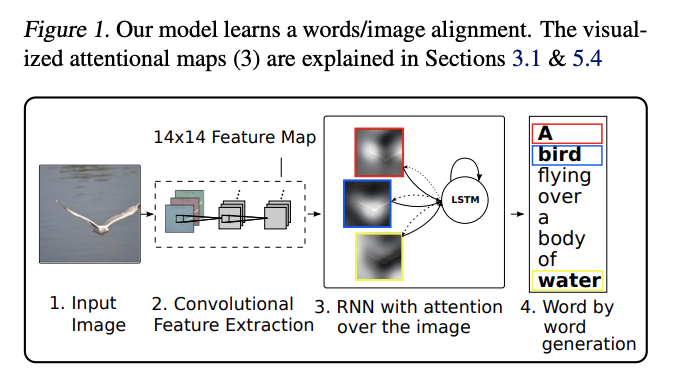

Source: link.springer.com

In the following implementation, there are two layers of attention network built in, one at the sentence level and the other at the review level. The hierarchical attention model gradually suppresses irrelevant regions in an input image using a progressive attentive process over multiple CNN layers.
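For the image case, the progressive process can be pictured as a gate computed at every layer that scales each spatial location before the next layer sees it. The sketch below is an assumption-laden illustration, not the cited model: the convolution is replaced by a per-location linear map with ReLU, the gate is a sigmoid score per location, and all parameter names are hypothetical.

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def progressive_attention(features, layers):
    """Apply per-layer spatial gates to a feature map of shape (H, W, C).

    Each entry of `layers` is (W_conv, v_att): a 1x1-convolution stand-in
    and a vector that scores every spatial location.
    """
    gates = []
    for W_conv, v_att in layers:
        features = np.maximum(features @ W_conv, 0.0)  # 1x1 conv + ReLU stand-in
        gate = sigmoid(features @ v_att)               # (H, W), values in [0, 1]
        features = features * gate[..., None]          # pass or suppress each location
        gates.append(gate)
    return features, gates


rng = np.random.default_rng(0)
height, width, channels = 4, 4, 3
image_features = rng.normal(size=(height, width, channels))
layers = [(rng.normal(size=(channels, channels)), rng.normal(size=channels))
          for _ in range(3)]
out, gates = progressive_attention(image_features, layers)
```

Since the gated output of one layer is the input of the next, a location that is suppressed early contributes less and less downstream, which matches the "gradual" suppression described in the text.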

The error I get ... I have also added a dense layer that takes the output from the GRU before feeding it into the attention layer.
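One reading of a dense layer placed between the GRU and the attention step is the one-layer projection in the word-attention equations of Yang et al.; mapping it onto the poster's code is an assumption. Here $h_{it}$ is the GRU output for word $t$ of sentence $i$, $W_w$ and $b_w$ are the dense layer's weights and bias, $u_w$ is a learned context vector, and $s_i$ is the resulting sentence vector:

$$
u_{it} = \tanh(W_w h_{it} + b_w), \qquad
\alpha_{it} = \frac{\exp(u_{it}^\top u_w)}{\sum_{t'} \exp(u_{it'}^\top u_w)}, \qquad
s_i = \sum_{t} \alpha_{it} h_{it}
$$

The sentence-level attention has the same form, with sentence encoder states in place of $h_{it}$ and its own context vector.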

Source: pngset.com

In this work, we propose a hierarchical pyramid diverse attention (HPDA) network, which can describe diverse local patches at various scales adaptively and automatically from varying hierarchical layers. The hierarchical graph representation layer (HGRL), formed by stacking a graph isomorphism convolution layer (GIN) and the proposed regularized self-attention graph pooling layer (RSAGPool), is used to fuse the multi-sensor information and model the spatial dependencies of graphs.

Source: sciencedirect.com

In this research, the context-based ELMo 5.5B model is used to generate the word embeddings that seed the classifier.

Embedding layer. Word Encoder.

Source: researchgate.net

The key features of HAN that differentiate it from existing approaches to document classification are that (1) it exploits the hierarchical nature of text data and (2) the attention mechanism is adapted for document classification.
